Hi, y’all!

Last week there were no events to visit, so I went to the AI exhibition “Photography Through The Lens of AI” at FOAM, the photography museum in Amsterdam. It was nicely divided into four chapters: Missing Body (exploring forms and how AI is represented), Missing Person (what it means that AI can substitute for humans), Missing Camera (AI creating events that never took place), and Missing Viewer (no need for humans; AI can live on its own). Not all the work was super good, but it was definitely worth the visit. Without my planning it, the exhibition connected rather well to last week's triggered thought on “sensors impersonating cameras to recreate reality”.

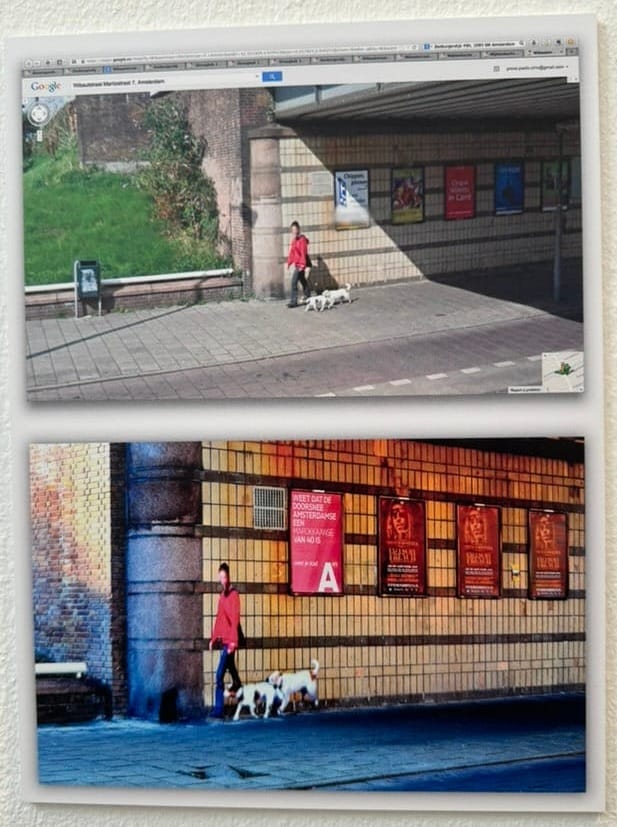

One project in the parallel exhibition of work by Paolo Cirio, Street Ghosts, was a kind of reversed version of James Bridle’s lovely project Render Ghosts: the artist took the blurred people in Google Street View pictures and printed them life-size back into reality at the same location. See below.

Another project showed the shadows of people whose bodies the AI had removed. I wondered what would happen if you fed an AI only the shadows of people (or objects) rather than the people themselves. Would the AI be able to create interesting originals from those shadows?

This one is on my endless reading list. You may already know about bias in AI, but she had the right examples, and it was a pleasant conversation.

Triggered thought

Watching the short documentary “The secret robot that will disrupt fashion”, about a new way of producing clothing, made me think about different lenses on generative things. The theme we have developed for the TH/NGS 2024 conference is all about formulating questions about things that become co-performing partners. They start conversations with us to generate new and possibly unexpected output.

The Vega factory portrayed in the documentary is developing a way to weave yarn directly into clothing pieces, much more efficiently and custom-made. In a way, it cuts out the intermediate machines and steps, going directly from demand to production.

One thought is what the role of design will be in this case. Will we have a form of co-design where the order of designing and making is shifted? Will the consumer create the garment directly, partnering with a designer who brings special capabilities? Reversed co-design?

Another thought is how generative AI will find its place. It will not only translate the human body into a machine-readable protocol for printing the garment you wear but also act like that designer, creating new variations and combinations. Will the machine, drawing on hidden possibilities, create new forms of clothing? Will it create a jumpsuit instead of the separate pieces of a suit, or divide garments differently, into left and right parts instead of bottoms (jeans) and tops?

We see a different relationship with the machine, and a different division of tasks between machines and humans. What if there were a production line in every city: instead of a clothing shop, a machine you can send orders to? Will workshops be organized where you create your personal clothing together with others and with professional designers? Would such a workshop replace shopping? And of course, there will still be variation in what we see. We will have runways and fashion designers who inspire with totally different design methods. There will be fashion trends. This is a kind of immersive layer that only becomes tangible once we create the custom “printed” piece.

The relationship between robotic machines and garment production is a research topic of Troy Nachtigall at the Wearable Data Lab. Is this happening already, and how far has it developed? What is the impact of new generative AI on a market system like fashion?

Read the full newsletter here, with

- Notions from last week’s news on Human-AI partnerships, Robotic performances, Immersive connectedness, and Tech societies

- Paper for the week

- Looking forward with events to visit

Thanks for reading. I started blogging ideas and observations back in 2005 via Targetisnew.com. Since 2015, I have published a weekly update with links to the news and reflections. I always capture news on tech and its societal impact from my perspective and interests. In the last few years, it has focused on the relationship between humans and tech, particularly AI, IoT, and robotics.

The notions from the news are distributed via the weekly newsletter, archived online here. Every week, I reflect more deeply on one topic, a triggered thought. I share that thought here and point to my newsletter for an overview of news, events, and more.

If you are a new reader and wondering who is writing, I am Iskander Smit. I was educated as an industrial designer and have worked in digital technology all my life. I am particularly interested in digital-physical interactions, with a focus on human-tech intelligence co-performance. I like to (critically) explore the near future in the context of cities of things, and I organise ThingsCon. I call Target_is_New my practice for making sense of unpredictable futures in human-AI partnerships. That is the lens I use to capture interesting news and share a paper every week.

Feel invited to reach out if you need some reflections; I might be able to help out!